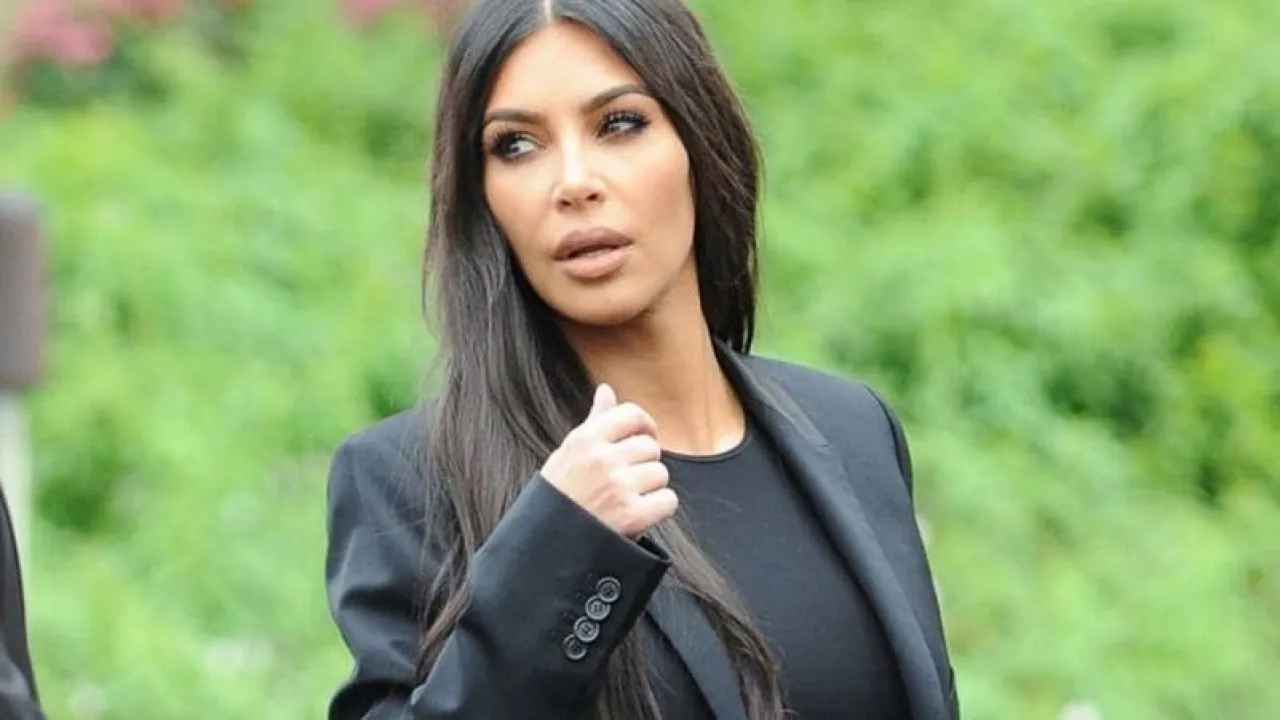

Khaberni - American reality star Kim Kardashian has blamed artificial intelligence, specifically ChatGPT, for failing some of her law exams, saying she relied on inaccurate answers provided by the chatbot.

Kardashian, who has been studying law for years, made the remarks in a "Vanity Fair" video interview in which she took a lie detector test. She said she used ChatGPT during study sessions and exams, but later discovered that much of the information she relied on was incorrect.

Remarks coincide with a rumor of a ban on legal advice

Kardashian's comments came as rumors spread online that OpenAI had disabled ChatGPT's ability to provide legal or medical advice.

However, the company quickly denied the claims, clarifying that the terms in question in its user agreement were not new and were meant only to emphasize that ChatGPT is not a substitute for licensed professionals in these fields.

Karan Singhal, who leads health AI at OpenAI, posted on X that the rumor stemmed from a misunderstanding of an existing clause that had been added some time ago and that introduces no new restrictions on legal or medical questions.

The trap of overconfidence in artificial intelligence

Kardashian's experience highlights a common problem among users of artificial intelligence: overconfidence in answers that seem precise and well organized but are often wrong or entirely fabricated.

Experts point out that the danger lies not only in the incorrect information itself, but also in the confident manner in which the system presents it, which can easily lead users to believe it.

While ChatGPT can simplify and explain legal or medical concepts in straightforward language, it cannot be relied upon as an official source or a substitute for specialists. The platform itself warns against using its answers in areas requiring a professional license, such as law or medicine.

Kim Kardashian's story shows that neither wealth nor fame protects anyone from the mistakes of artificial intelligence. Even experienced users can fall into the trap of absolute trust in technology, which makes it necessary to always verify information before relying on it, especially in legal or health matters where error is not an option.