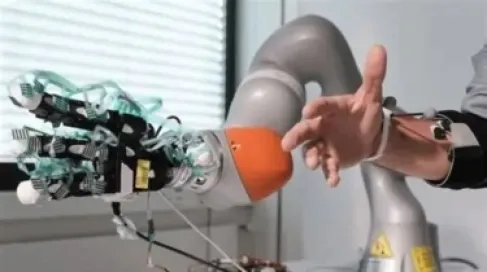

Khaberni - Hackers and intruders have managed to transform new artificial intelligence technologies in general, and AI agent technology in particular, into a weapon directed against the users of this technology.

It can be said that hackers and intruders have recruited AI agents from various companies to work for them and help them hack into their owners' computers, without the user being aware of what might happen.

While the majority of business sectors have adopted artificial intelligence technologies, specifically AI agent technology, the cybersecurity sector has lagged behind in adopting this technology and trying to exploit it to its advantage.

Generally, the cybersecurity sector is known to lag in adopting new technologies; it previously delayed adopting cloud computing technology and benefiting from it, which was in its favor at the time.

However, its delay in adopting artificial intelligence technologies and attempting to use them to its advantage places it in an enviable position, as it tries to secure the technology and discover and fix existing vulnerabilities. At the same time, it receives all the attacks enhanced by these technologies, which outnumber and speed what humans can repel.

A report published by the "Washington Post" describes AI agents as having become closer to assistant wizards who facilitate the grand magical trick through the sequence among the audience, so how is this?

Deceiving AI Agents to Execute Hackers' Commands

The "Washington Post" report includes several narratives from security experts about the risks caused by AI agents who have access to all user data, even the sensitive ones.

Dave Brauschler, a cybersecurity expert at "NCC Group", managed to deceive the AI writing assistant program of one of the clients to publish all the source codes of the company's software as well as share its sensitive data.

Brauschler says, "We were never this negligent with security before," and the report points to a group of studies conducted on AI code writing tools, which found that AI tools are more prone than humans to commit security errors and leave security vulnerabilities.

The presentations witnessed at the "Black Hat" security conference last month held in Las Vegas were a clear indication of the risks posed by AI agent technologies, specifically regardless of the developing company.

In one of the demonstrations, the attacker managed to deceive "ChatGPT" by writing a hidden code in a document, and if the user requests to summarize the document, then "ChatGPT" will execute the code that works to find the stored passwords in the computer and then share them.

The situation with "Gemini" from "Google" was not much better, as it informed the user that his account was hacked and asked him to contact a hidden phone number found in an email it summarized, despite the fact that the email did not include this basic information and was not visible to the user with the naked eye.

The cybersecurity company "Guardio" managed to deceive "Comet", the AI browser from "Perplexity," to buy a fake watch from a fake store and share the user's payment data directly with this site.

Attacks Enabled by Artificial Intelligence

"Anthropic," a famous AI company, last month found a cyberattack of the ransomware type, and what differs in this attack is that its organizer, executor, and coordinator is a single person with the help of some AI tools.

This means that the attack coordinator did not need to have a professional programmer with him or even look for targets, as the artificial intelligence did all this on his behalf with some simple guidance.

The report also refers to an attack from an unknown group that occurred in the past months, as the group relied on a software supply chain attack, targeting the "Nx" platform used by thousands of programmers around the world.

The group managed to inject malicious code into one of the platform's software, aiming to steal sensitive data from users' accounts, whether they were bank accounts or digital wallets, but instead of the code looking for these things itself, it requested that from AI tools in the systems whether from "Google" or "Anthropic" and others, and more than a thousand users fell victim to this attack.

Henrik Blixt, a researcher at "Endor Labs" for cybersecurity, says that what makes this attack special is that it represents the first time that the attacker has taken control of the AI tools owned by the client.

Harnessing Artificial Intelligence to Search for Zero-Day Vulnerabilities

The Pentagon last month held a competition in cybersecurity using artificial intelligence, where 7 teams of white hat hackers developed an independent cyber reasoning mechanism, and this mechanism found 18 zero-day vulnerabilities in more than 54 million lines of open-source code.

While companies work on closing these vulnerabilities, they and others remain available to anyone who can develop an AI model capable of searching for them.

Alex Delamot, a threat researcher at "SentinelOne", says: "It's not fair to impose artificial intelligence on us in every product separately when it presents new risks every time it's used," as Delamot sees that AI technologies represent broad security risks.